Why Raw Alerts Slow Down Teams

If you work in security, you already know the problem: you don’t need more alerts. You need to know what matters, why it matters, and what to do next. That’s what people mean by actionable threat intelligence. It’s not more data. It’s clarity.

Raw findings stall execution by forcing teams into guesswork, diverting time and focus away from decisive action.

Effective action requires a clear foundation:

- Confirmation: Is this real, or just noise? If it’s unclear, it gets ignored or debated endlessly.

- Urgency: How serious is it right now? A finding should tell you whether it’s harmless, suspicious or actively impacting customers.

- Ownership: Who is responsible for it? External issues often sit between security, fraud, legal, comms and IT, so progress stalls without a single owner.

- Next step: What should we do next? If the finding doesn’t point to an action, it becomes another item in the queue instead of getting resolved.

If findings don’t answer these questions, the result is predictable: false positives pile up, trust in the feed drops, and serious threats get buried in the noise.

What “Context” Looks Like in Real Life

When people say “add context,” they don’t mean a longer report. They mean the few details that turn a finding into a decision. Context answers the same questions every time:

What is it? How serious is it? Who is it affecting? What should we do next?

Here’s what that looks like across common external threats:

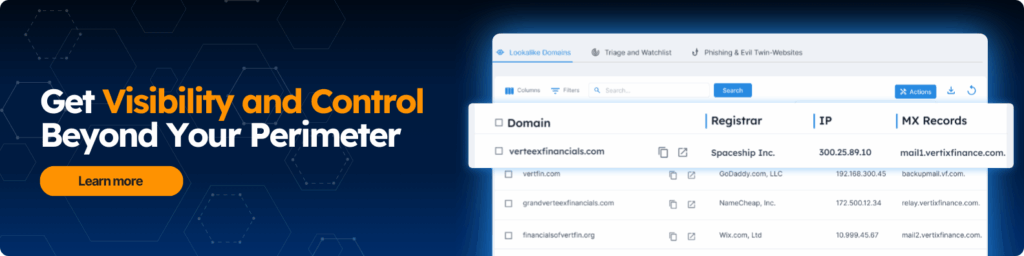

1. Domains (lookalike sites, phishing pages, spoofed domains)

- What it is: Is this a harmless lookalike registration, or a site pretending to be your brand?

- What it can do: Can it collect logins, take payments, or send people to a fake support channel?

- How active it is: Is anyone actually visiting it, sharing it, or linking to it right now?

- Why you should care: If it’s live and customer-facing, it can drive fraud quickly, and the longer it stays up, the more victims it creates.

2. Impersonation (fake social profiles, fake executives, fake customer support)

- What it is: Is this someone copying your name and logo, or an account actively pretending to be “official”?

- What it’s doing: Is it posting scams, replying to customers, or acting like a support team?

- Who it’s targeting: Is it aimed at customers, employees, investors, or job seekers?

- Why you should care: Impersonation is more than a “brand issue.” It’s often the front door to fraud, phishing, and account takeovers.

3. Leaked data (credentials, customer data, internal files, card details)

- What showed up: Are we talking about passwords, customer records, internal files, or payment data?

- Who is impacted: Is it tied to employees, executives, customers, or a specific business unit?

- What attackers can do with it: Could this lead to account takeovers, phishing, scams, or regulatory exposure?

- Why you should care: A leak becomes a real incident when it creates a clear next move for an attacker — and that window can be short.

When your intel answers those four questions upfront, it stops being “another alert” and becomes something the team can actually use. You cut the back-and-forth, reduce noise, and move faster on the items that can harm customers or the business.

Severity Should Change Based on Activity and Intent

You already know that not every external finding deserves the same level of attention. A common mistake is treating everything as “high risk” or everything as “low confidence.”

Severity should move up or down based on two simple signals: activity and intent.

Activity answers: Is this actually being used right now?

Intent answers: Is this clearly meant to mislead, steal or damage trust?

Here’s what that looks like in plain terms:

- A lookalike domain

  - Low severity: It exists, but there’s no real sign it’s being used. No customer-facing scam, no campaign, no visible impact.

  - High severity: It’s being used to impersonate your login, your support flow, your payment process, or it’s already drawing traffic and confusing people.

- A fake social profile

  - Low severity: The profile exists, but it’s inactive or has no real reach.

  - High severity: It’s posting, commenting, messaging, running “support” conversations, or copying leadership content in a way that pushes people toward a scam.

- Leaked credentials or sensitive data

  - Low severity: Old exposure with limited relevance, or data that doesn’t lead to immediate access.

  - High severity: Fresh exposure tied to active access, fraud risk, or anything that can be used quickly to break trust (customer data, login details, payment info, internal files).

This is why “severity” can’t be a fixed label. It should reflect what’s happening right now and what the attacker is trying to achieve. When you rate findings this way, teams stop arguing about what-ifs and start aligning on the same question: Is this about to cause harm, or is it something we can track and handle calmly?
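The activity-and-intent rule can be sketched as a small function that recomputes severity from current signals instead of storing a fixed label. This is a minimal illustration, not Styx Intelligence’s method: the `Finding` fields, the three severity levels, and the choice to rate “one signal without the other” as medium are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    LOW = "low"
    MEDIUM = "medium"  # assumption: the text only describes low and high
    HIGH = "high"


@dataclass
class Finding:
    # Hypothetical fields for illustration only.
    description: str
    is_active: bool     # Activity: is it being used right now (traffic, posts, campaigns)?
    shows_intent: bool  # Intent: is it clearly meant to mislead, steal, or damage trust?


def rate_severity(finding: Finding) -> Severity:
    """Re-rate a finding from its current activity and intent signals."""
    if finding.is_active and finding.shows_intent:
        return Severity.HIGH
    if finding.is_active or finding.shows_intent:
        # One signal without the other: worth watching, not yet an emergency.
        return Severity.MEDIUM
    return Severity.LOW


parked = Finding("lookalike domain, parked, no traffic", is_active=False, shows_intent=False)
phishing = Finding("lookalike domain serving a fake login page", is_active=True, shows_intent=True)
print(rate_severity(parked).value)
print(rate_severity(phishing).value)
```

Because the rating is derived from signals each time it runs, a parked domain that starts serving a fake login page moves from low to high on its next evaluation without anyone relabeling it.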

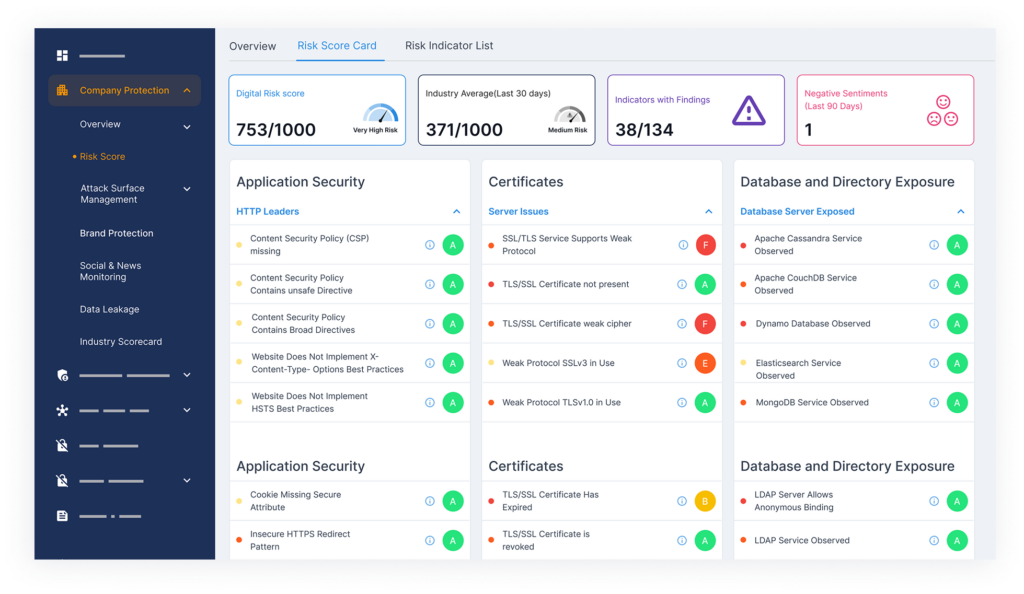

Once you add context, the next challenge is scale. A team can’t manually evaluate every domain, impersonation, or leaked credential. That’s where risk scoring comes in: it’s how you turn context into a consistent, repeatable way to prioritize what matters first.

What Risk Scoring Should Do and What It Should Not Do

A risk score is not there to “prove” something is malicious. Its job is to help a team decide what deserves attention first.

A good score should do a few things really well:

- Help you rank your work: When you have 100 findings, the score should make it obvious which five you look at first.

- Explain itself: A score is only useful if it comes with the “because.” People need to see what’s driving the risk so they can trust it.

- Move as the situation changes: If activity spikes or stops, the score should change with it. That’s the whole point of watching external threats in real time.

- Point to an action: The best scoring systems don’t just label risk. They help route it: investigate, monitor, escalate, takedown, notify, or close.

What a risk score should not do:

- It should not be a black box: If the team can’t tell why something is “high,” they won’t use it. They’ll go back to manual triage.

- It should not replace judgment: The score should speed up decision-making, not pretend to make decisions for you.

- It should not create fake precision: “92 vs 89” doesn’t matter. Buckets and clear reasons matter.

- It should not push everything into high severity: If everything is urgent, nothing is. If a score triggers too often, teams start ignoring it.

Remember, the goal is simple: less debate, more action.

A score should make the first decision easier, not become another thing the team argues about.
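The “should” list above can be sketched as an explainable, bucketed score: it sums weights for observed signals, collapses the total into low, medium, or high, keeps the reasons alongside the result, and suggests a next step. Everything here is a hypothetical illustration: the signal names, weights, thresholds, and bucket-to-action mapping are assumptions, not a recommended configuration.

```python
from dataclasses import dataclass, field

# Hypothetical signal weights; a real system would tune these against past outcomes.
SIGNAL_WEIGHTS = {
    "live_and_customer_facing": 40,
    "collects_credentials_or_payments": 30,
    "recent_activity_spike": 20,
    "targets_executives": 10,
}

BUCKET_ORDER = {"high": 0, "medium": 1, "low": 2}


@dataclass
class ScoredFinding:
    bucket: str   # "low", "medium", or "high": buckets instead of fake precision
    action: str   # suggested next step, used for routing
    reasons: list[str] = field(default_factory=list)  # the "because" behind the bucket


def score_finding(signals: set[str]) -> ScoredFinding:
    """Sum the weights of known signals, then map the total to a bucket and action."""
    reasons = sorted(s for s in signals if s in SIGNAL_WEIGHTS)
    total = sum(SIGNAL_WEIGHTS[s] for s in reasons)
    if total >= 60:
        return ScoredFinding("high", "escalate", reasons)
    if total >= 30:
        return ScoredFinding("medium", "investigate", reasons)
    return ScoredFinding("low", "monitor", reasons)


def triage_order(findings: list[ScoredFinding]) -> list[ScoredFinding]:
    """Rank findings so the high bucket is looked at first."""
    return sorted(findings, key=lambda f: BUCKET_ORDER[f.bucket])


urgent = score_finding({"live_and_customer_facing", "collects_credentials_or_payments"})
quiet = score_finding({"targets_executives"})
for f in triage_order([quiet, urgent]):
    print(f.bucket, f.action, f.reasons)
```

Re-running `score_finding` whenever signals change lets the bucket move with activity, and because totals are collapsed into buckets, small numeric differences never change what the team sees.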

How Prioritization Ties to Business Impact

Prioritization only works when it maps to outcomes the business actually cares about. Otherwise, teams end up arguing about labels like “high” and “medium” instead of answering the real question: what happens if we do nothing?

A good workflow connects every finding to one of a few clear business impacts:

- Money at risk: Will this lead to fraud, chargebacks, wire scams, fake invoices, or stolen card data?

- Access at risk: Will this lead to account takeover, credential stuffing, or a breach through stolen logins?

- Trust at risk: Will customers lose confidence, churn, flood the support team, or believe something false about your brand?

- People at risk: Are executives or employees being targeted, impersonated, or harassed in a way that could escalate?

- Legal and compliance risk: Is this creating reporting obligations, regulatory exposure, or brand misuse that needs legal review?

Mapping findings to business impact is also how you stop them from bouncing between teams.

When the impact is clear, ownership becomes obvious:

- If it’s fraud, fraud and security move first.

- If it’s customer trust, comms and support need context.

- If it’s legal exposure, legal needs the evidence trail and a decision window.

- If it’s credentials or access, IT and security need to rotate, revoke, or monitor immediately.
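Once impact is known, routing can be a simple lookup. The sketch below assumes each finding has already been tagged with one or more of the five impact categories listed earlier; the team names are placeholders, and the owner for “people at risk” is a hypothetical default because the text does not assign one.

```python
from enum import Enum


class Impact(Enum):
    MONEY = "money"
    ACCESS = "access"
    TRUST = "trust"
    PEOPLE = "people"
    LEGAL = "legal"


# Owners per impact, following the mapping in the text.
OWNERS: dict[Impact, list[str]] = {
    Impact.MONEY: ["fraud", "security"],
    Impact.ACCESS: ["it", "security"],
    Impact.TRUST: ["comms", "support"],
    Impact.LEGAL: ["legal"],
    Impact.PEOPLE: ["security"],  # hypothetical default: not specified in the text
}


def route(impacts: set[Impact]) -> list[str]:
    """Return the de-duplicated teams that should receive a finding, in a stable order."""
    teams: list[str] = []
    for impact in Impact:  # iterate in definition order for deterministic output
        if impact in impacts:
            for team in OWNERS[impact]:
                if team not in teams:
                    teams.append(team)
    return teams


print(route({Impact.MONEY, Impact.ACCESS}))
```

A finding tagged with both money and access risk goes to fraud, security, and IT once each, so no team has to forward it to another.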

The best prioritization systems don’t just say “this is severe.” They say why it’s severe in plain language: “This is likely to cause customer loss or fraud within hours,” versus “This is worth tracking, but it’s not driving harm right now.”

That’s the difference between a score that gets acted on and a score that gets ignored.

Closing Thoughts

Actionable threat intelligence is not about collecting more alerts. It’s about turning external signals into clear decisions. You should know what’s real, what’s urgent, who owns it, and what happens next.

When context and prioritization are built into the workflow, teams stop chasing noise and resolve issues faster, before fraud spreads, trust drops, or customers get scammed.

Want to see what actionable intelligence looks like in practice?

Styx Intelligence helps you turn external risk signals into prioritized, evidence-backed actions across security, fraud, legal, and brand teams.